Ingress Termination

Because the Shivering-Isles Infrastructure is local-first, it faces the challenge of optimising traffic flow for local devices without breaking external access.

TCP Forwarding

An intentional design decision was to avoid split DNS. All DNS is hosted on Cloudflare with full DNSSEC integration, and devices run with active DoT that always connects to an external DNS server, which made split DNS a poor fit.

At the same time, simply rerouting all traffic destined for the external IP would also be problematic, as it would require either a dedicated IP address or complex source-based routing that reroutes only traffic from client networks while allowing VPN traffic to continue to flow to the VPS.

The most elegant solution found was to reroute traffic at the TCP level: high-volume traffic on port 443 is rerouted using a firewall rule, while the remote IP stays identical and VPN and SSH traffic are left untouched.

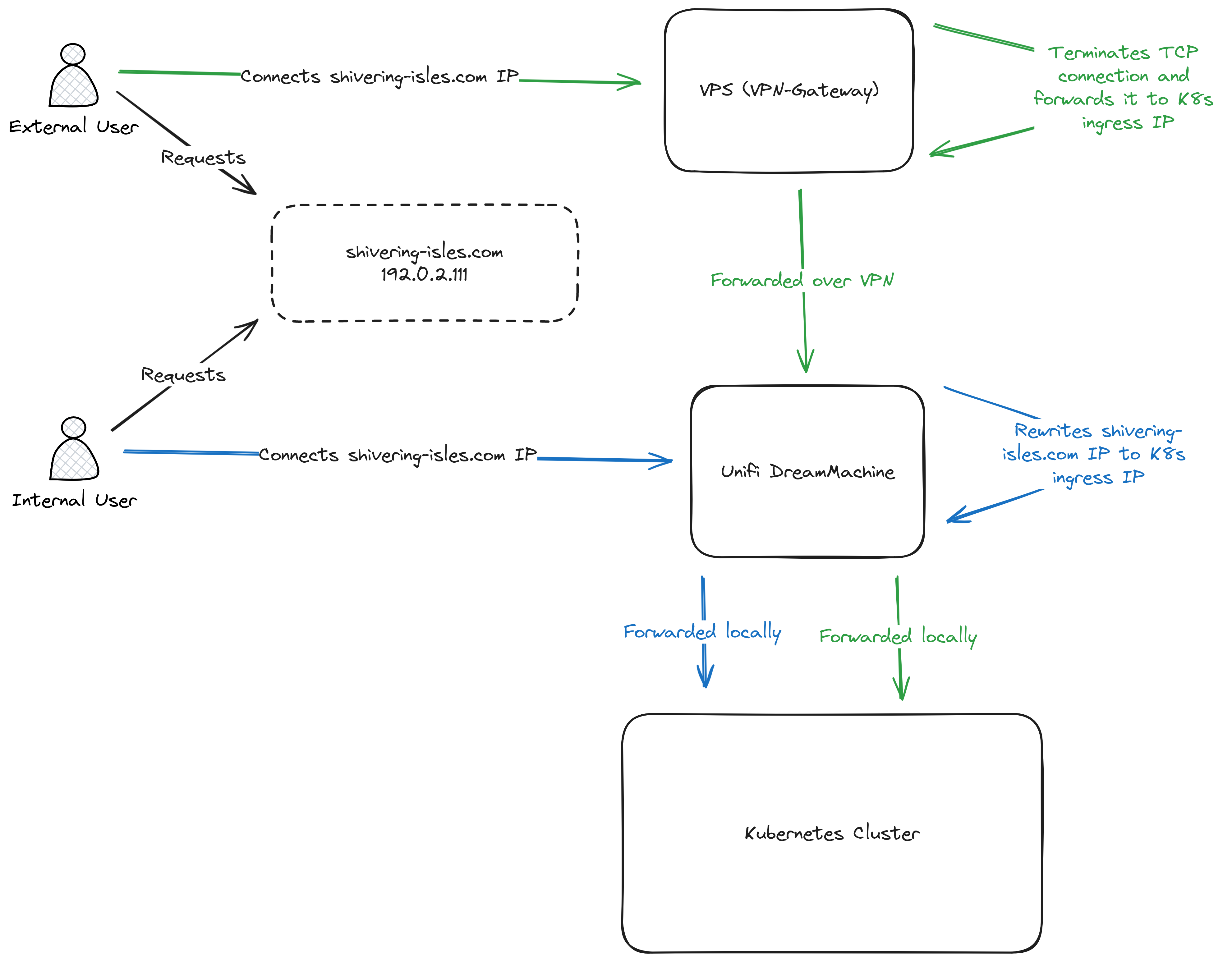

A request for the same website looks like this:

In both cases the connections are terminated on the Kubernetes cluster. The external user reaches the VPS and is then rerouted over VPN. The local user is rerouted before the connection reaches the internet, which keeps all traffic local.

Since only TCP connections are forwarded at any point, all TLS termination takes place on the Kubernetes cluster in both cases.

Setting up local redirects

To redirect traffic in Kubernetes, the services with external connectivity get the IPs of the external load balancer as externalIPs in the Kubernetes services.
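As a minimal sketch of how such an address could be attached, the snippet below builds a JSON merge patch that sets `spec.externalIPs` on a Service and prints the matching `kubectl patch` command. The Service name `ingress-nginx-controller`, the namespace `ingress-nginx`, and the address `203.0.113.10` are placeholders for illustration, not values taken from this infrastructure.

```shell
#!/bin/sh
# Hypothetical values; substitute the real Service and load balancer IP.
SERVICE="ingress-nginx-controller"
NAMESPACE="ingress-nginx"
EXTERNAL_IP="203.0.113.10"

# Build a merge patch that sets spec.externalIPs on the Service.
build_patch() {
    printf '{"spec":{"externalIPs":["%s"]}}' "$1"
}

PATCH="$(build_patch "$EXTERNAL_IP")"

# Print the command instead of running it, so it can be reviewed first.
echo "kubectl -n $NAMESPACE patch service $SERVICE --type merge -p '$PATCH'"
```

In a declarative setup the same field would more likely be set directly in the Service manifest; the patch form is only used here to keep the example short.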

On the UDM, as well as on other clients on the same subnet as the Kubernetes cluster, the following script is used (assuming external-k8s.example.com as the target and internal-k8s.example.com as the destination):

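#!/bin/sh
# Redirect local HTTPS traffic for the external load balancer IP to the internal address.

TARGET_DNS="external-k8s.example.com"
DESTINATION_DNS="internal-k8s.example.com"

# nslookup prints the resolver's address first, so take the last "Address:" line.
TARGET_IP="$(nslookup "$TARGET_DNS" | grep 'Address:' | tail -1 | sed -e 's/Address: //g')"
DESTINATION_IP="$(nslookup "$DESTINATION_DNS" | grep 'Address:' | tail -1 | sed -e 's/Address: //g')"

# Abort if either lookup failed, so no rule with an empty address is installed.
[ -n "$TARGET_IP" ] && [ -n "$DESTINATION_IP" ] || exit 1

# PREROUTING rewrites traffic forwarded for other clients.
iptables -t nat -A PREROUTING --destination "$TARGET_IP" -p tcp --dport 443 -j DNAT --to-destination "$DESTINATION_IP:443"

# OUTPUT rewrites traffic that originates on this host itself.
iptables -t nat -A OUTPUT --destination "$TARGET_IP" -p tcp --dport 443 -j DNAT --to-destination "$DESTINATION_IP:443"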

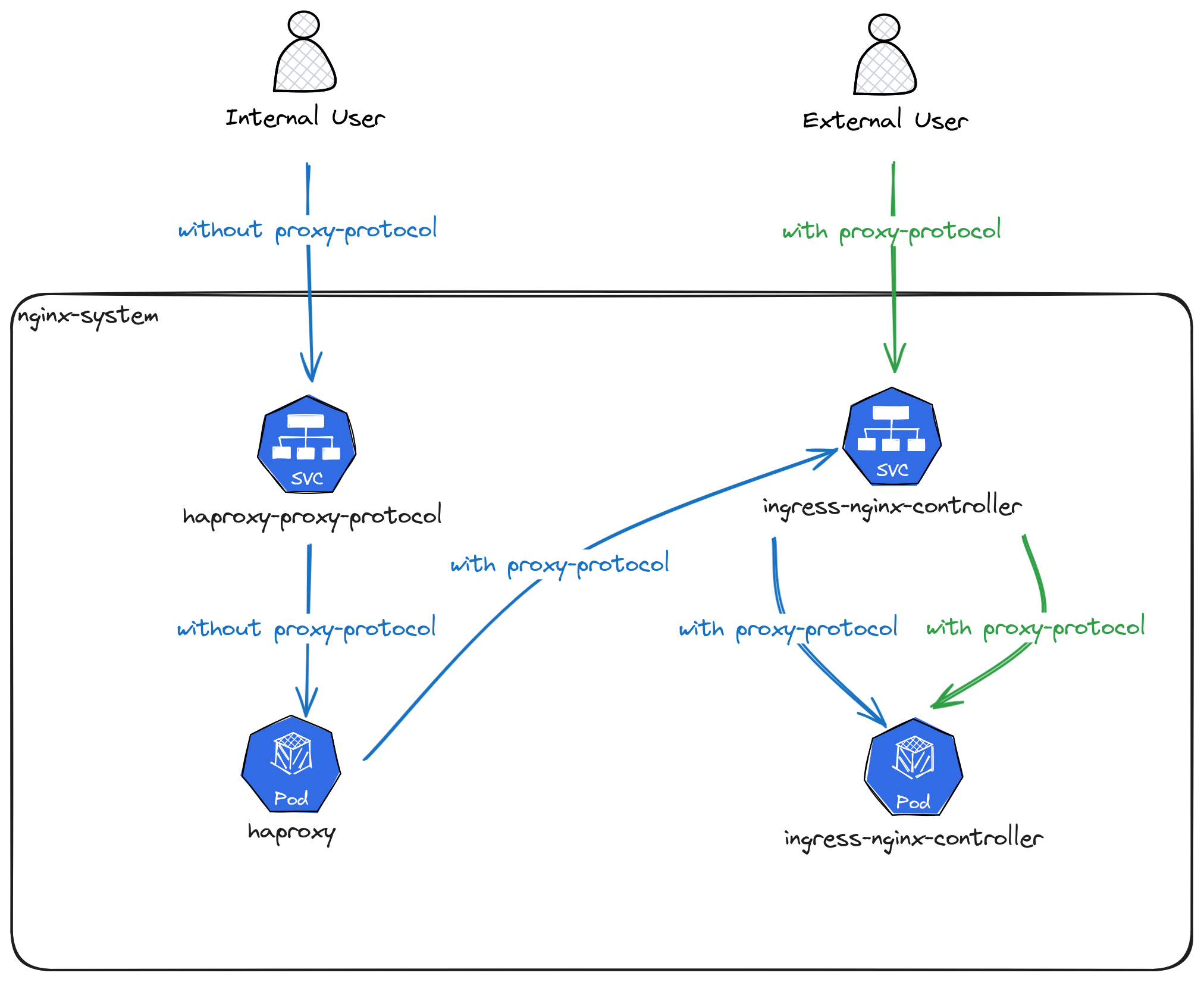
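Because `-A` appends a rule every time it is called, running the script more than once leaves duplicate entries in the NAT table. The following is a hedged sketch of one way to make the rule installation idempotent, assuming the installed iptables supports the `-C` (check) option. The `ensure_dnat` helper and the overridable `IPTABLES` variable are introduced here for illustration and are not part of the original script.

```shell
#!/bin/sh
# IPTABLES can be overridden, e.g. for a dry run. This variable is an
# assumption of this sketch, not part of the original script.
IPTABLES="${IPTABLES:-iptables}"

# Add a DNAT rule for port 443 only if an identical rule is not present.
# Arguments: chain, target IP, destination IP.
ensure_dnat() {
    chain="$1"; target_ip="$2"; destination_ip="$3"
    # -C exits non-zero when the rule does not exist yet.
    if ! $IPTABLES -t nat -C "$chain" --destination "$target_ip" -p tcp --dport 443 \
            -j DNAT --to-destination "$destination_ip:443" 2>/dev/null; then
        $IPTABLES -t nat -A "$chain" --destination "$target_ip" -p tcp --dport 443 \
            -j DNAT --to-destination "$destination_ip:443"
    fi
}

# Usage (requires root), with the variables from the script above:
#   ensure_dnat PREROUTING "$TARGET_IP" "$DESTINATION_IP"
#   ensure_dnat OUTPUT "$TARGET_IP" "$DESTINATION_IP"
```

This does not handle a changed IP address: if the resolved addresses change, the old rules remain and would need to be removed separately.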

Preserving source IP addresses

On the VPS, the TCP connection is handled by an HAProxy instance that speaks proxy-protocol with the Kubernetes ingress service.
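To illustrate what proxy-protocol adds to a forwarded connection, here is a small sketch that prints a version 1 header, the single text line a proxy sends ahead of the forwarded bytes so that the backend learns the original client address. The source does not say whether version 1 or 2 is in use; version 1 is shown because it is human-readable. The addresses and ports are documentation values, and the `proxy_v1_header` helper is hypothetical. HAProxy generates this header itself when configured to send it.

```shell
#!/bin/sh
# Format a PROXY protocol v1 header line for an IPv4 TCP connection.
# Arguments: source IP, destination IP, source port, destination port.
proxy_v1_header() {
    printf 'PROXY TCP4 %s %s %s %s\r\n' "$1" "$2" "$3" "$4"
}

# Example with documentation addresses (not taken from the real setup).
proxy_v1_header 198.51.100.7 203.0.113.10 51234 443
```

A backend that expects this header rejects or misreads connections that arrive without it, which is why a port has to be consistently either proxy-protocol or plain.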

On the UniFi Dream Machine, a simple iptables rule redirects the traffic. To also use proxy-protocol with the ingress service, the traffic is actually redirected to an HAProxy instance running in the Kubernetes cluster alongside ingress-nginx. This is mainly because ingress-nginx does not allow mixing proxy-protocol and non-proxy-protocol ports without custom configuration templates.